Claude Code: First impressions

June 4, 2025

I made some serious strides with my “vibe-to-learn-to-code” project. In half a day. I did this with Claude Code.

I watched the video; I read quite a few things about it; but they didn’t entirely prepare me for the experience. As always, AI progress moves so quickly that it’s hard not to be astonished when you experience a step change in capability.

Prior to this, I’d used Aider for some coding automation, as well as interacting directly with both ChatGPT and Claude via their standard chat interfaces. Those experiences were powerful enough; I built this website from scratch using Claude with artifacts.

Agentic coding is not entirely new, but it’s really become mainstream within the past year, if not the past six months. Here are some of my initial thoughts.

Project goals

I’m experimenting with teaching novices how to code by creating art in p5.js. The experience is predicated on a vibe coding approach, with an integrated chat window and automatic code insertion from an AI agent. I want to prototype quickly.

In an ideal world, I’d have a way to attach one’s LLM of choice, but I haven’t seen a way to do that. Because this is a prototype, I’m avoiding setting up accounts and payments to fund my own API key, and instead planning to integrate a local model or a free service if I can find one.

I’m not a web developer, so I have limited knowledge of HTML, CSS, and JavaScript, much less the modern front-end frameworks like React and Tailwind. That means I’ll be relying heavily on Claude’s knowledge of the technology, and trusting my own general programming expertise for reviewing code and finding bugs.

Setup and UI

It was very easy to get going with Claude Code. I had already set up an API key in my account so I could work with Anthropic models in Aider; that process itself is quite easy. Setting up my agent was a couple of command-line invocations and an interactive configuration process.

Everything happens in the terminal by default. That suits me fine, apart from the minor annoyances of editing prompts on the command line (which I could mitigate by moving them into files). There’s a small set of commands, but mostly I’m just typing the way I do in a typical LLM chat window, and watching its output.

Working

I gave the agent access to the files inside my project folder, and then wrote a very brief readme file describing the project:

Vibe Coding Instructor

A tool that teaches novices how to code, using an LLM-first approach.

Approach

Learners use a chat interface to code in a P5.js environment. They begin by writing prompts to create projects, and gradually learn how to read and modify generated code. Over time, they learn enough to begin writing code of their own.

The system tracks the learner’s current coding level, and always generates the appropriate code to avoid overloading too many unfamiliar concepts. The learner drives the process, pushing their discovery in accordance with their goals.

Design

The system has an ontology that defines concepts, learning objectives, and goals. It tracks the learner’s progress and knows which concepts they understand, those they’re focused on learning, and those that are beyond their reach.

The LLM uses the ontology when generating code, and the system verifies the generated code to ensure that it doesn’t contain any bits of P5.js API that violate the above constraints, looping back to LLM code generation when necessary.

The interface runs locally in a web browser and relies on a bundled small local model. It has an editor, an LLM chat area, and a rendering canvas. When the LLM proposes changes to the code, it presents them in a diff format that learners can understand, and each diff has a clickable annotation that explains what it does. The user can partially apply diffs and experiment with the changes.

I asked it to create a detailed plan based on this description. It delivered, creating a four-phase plan with implementation details and deliverables.

I’ve proceeded one phase at a time. For each phase, I ask Claude to generate a detailed implementation plan first. I review the plan and make adjustments, and then set it loose to write code. I can watch the work scroll by in the terminal window. At times it pauses to ask for permission to do potentially dangerous things like write files, run commands, or download things from the web. I can approve these one by one, or give a blanket approval for all future requests of that kind. Eventually it stops, I review the code and test the UI, and we iterate on finding and fixing bugs.

Phase 1

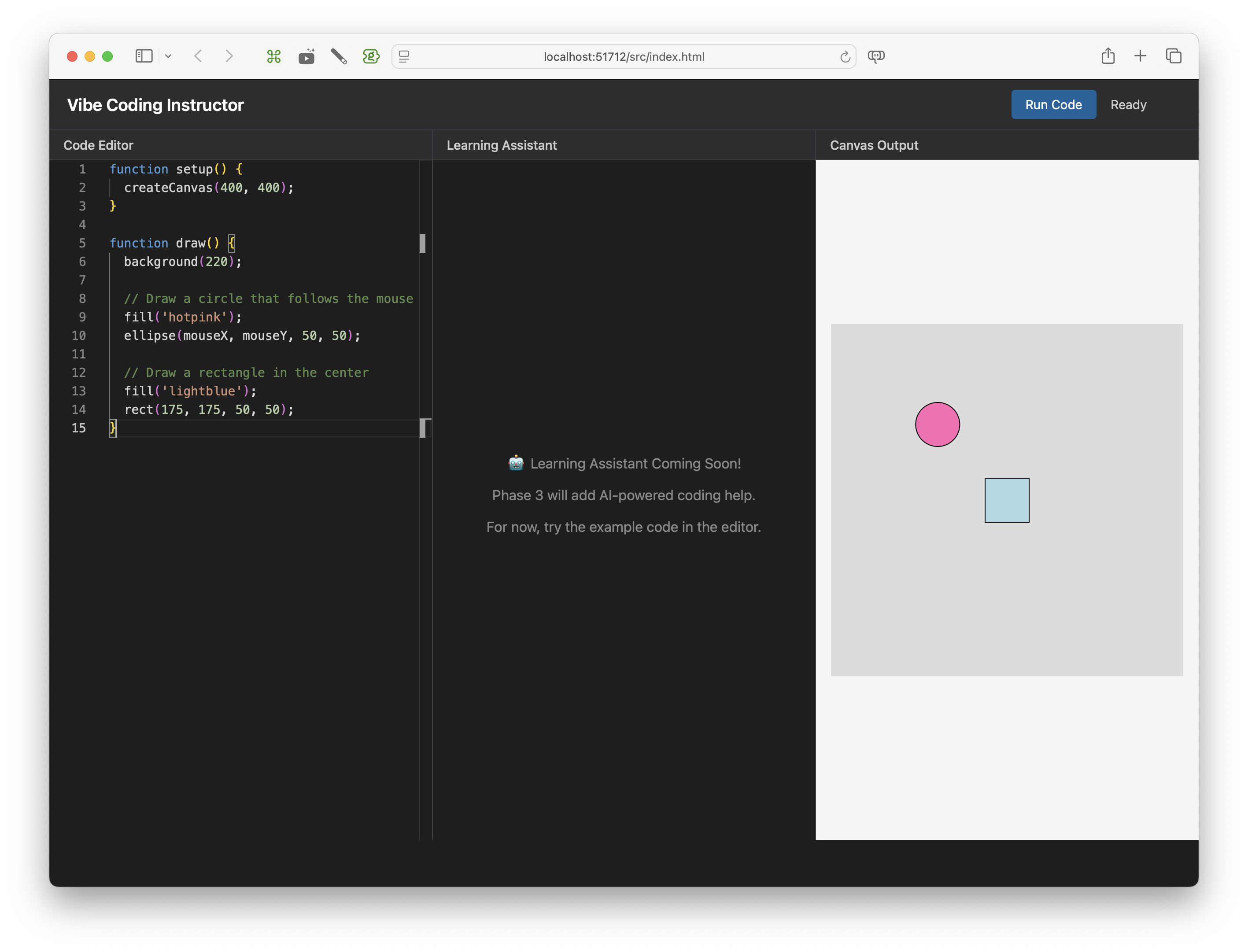

First, I revised my overall plan, opting for the simplest possible solution—for example, I nixed React and Tailwind. I figure the fewer fancy tools I need to use, the less trouble I’ll get into. After that, I got the basic UI up and running: a code editor, a p5 canvas, and a placeholder area for AI chat. I’m not exaggerating when I say that Claude had a working implementation up in minutes.

Doing even a basic version of this from scratch would have taken me at least a week—reading documentation, following tutorials, getting familiar with the required components such as the Monaco code editor. And Claude implemented live coding: when I edit the code on the left, there’s no need to press the Run Code button—the canvas updates automatically on the right. That’s something I wouldn’t even have attempted. I can read the code and basically see how it works, but it’s a level of JavaScript I don’t possess.
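For the curious, one common way to get this kind of live update is to debounce editor changes, so the sketch reruns only after typing pauses. The following is a minimal sketch of that idea, not the code Claude actually wrote for me. The helper name `createLiveRunner`, the `runSketch` callback, and the 300 ms delay are all assumptions made for illustration.

```javascript
// Illustrative only: createLiveRunner, runSketch, and the 300 ms default
// are hypothetical names and values, not taken from my project.
function createLiveRunner(runSketch, delayMs = 300, timers = globalThis) {
  let pending = null;   // handle of the timer currently waiting, if any
  let latestCode = "";  // most recent editor contents

  return {
    // Call this on every editor change.
    onEdit(code) {
      latestCode = code;
      // Cancel the previous timer so only the last edit triggers a run.
      if (pending !== null) timers.clearTimeout(pending);
      pending = timers.setTimeout(() => {
        pending = null;
        runSketch(latestCode);
      }, delayMs);
    },
  };
}

// Possible wiring with a Monaco editor instance (assumed to exist as `editor`):
//   const runner = createLiveRunner((code) => restartCanvas(code));
//   editor.onDidChangeModelContent(() => runner.onEdit(editor.getValue()));
// `restartCanvas` is a hypothetical function that reloads the p5 sketch.
```

The `timers` parameter exists only so the delay can be replaced with a fake in tests; in the browser the default global timers would be used.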

Phase 2

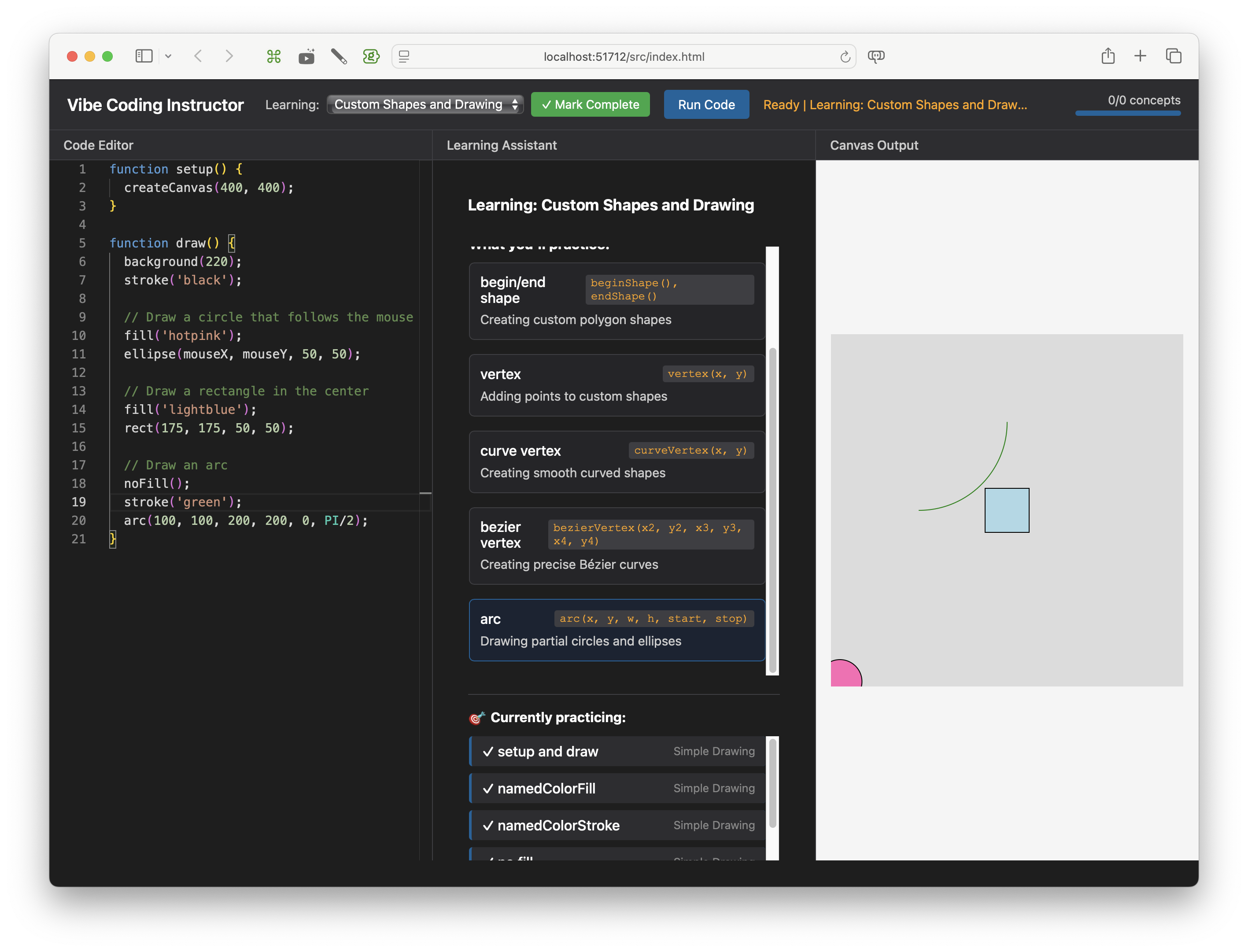

Next, I completed the internal knowledge system that understands the coding concepts and their dependencies, so that I’ll be able to constrain the coding assistant to match the learner’s current level of knowledge. I attached that to the coding window so it could detect what the learner is doing, and created a simple UI to visualize it.
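To make the idea concrete, here is a minimal sketch of what a concept graph with dependencies could look like, along with a naive check of code against the learner's level. It is an illustration under my own assumptions, not the system Claude built: the concept ids, their prerequisites, the grouping of p5.js functions, and the helper names `readyToLearn` and `findViolations` are all hypothetical.

```javascript
// Hypothetical concept graph. The ids, prerequisites, and function groupings
// are illustrative assumptions; only the p5.js function names are real.
const CONCEPTS = {
  canvas:    { requires: [],         functions: ["createCanvas", "background"] },
  shapes:    { requires: ["canvas"], functions: ["ellipse", "rect", "line"] },
  color:     { requires: ["shapes"], functions: ["fill", "stroke", "noStroke"] },
  animation: { requires: ["shapes"], functions: ["frameRate"] },
};

// Concepts the learner has not mastered yet but whose prerequisites are all known.
function readyToLearn(known) {
  return Object.keys(CONCEPTS).filter(
    (id) => !known.has(id) && CONCEPTS[id].requires.every((r) => known.has(r))
  );
}

// Naive check: find calls to catalogued p5.js functions that belong to
// concepts outside the allowed set. A regex scan like this can be fooled by
// comments and strings; a real implementation would likely parse the code.
function findViolations(code, allowed) {
  const owner = new Map();
  for (const [id, concept] of Object.entries(CONCEPTS)) {
    for (const fn of concept.functions) owner.set(fn, id);
  }
  const violations = new Set();
  for (const match of code.matchAll(/\b([A-Za-z_$][\w$]*)\s*\(/g)) {
    const concept = owner.get(match[1]);
    if (concept !== undefined && !allowed.has(concept)) violations.add(match[1]);
  }
  return [...violations];
}
```

With a check like this, generated code that uses something beyond the learner's level could be sent back to the LLM for another attempt, which is the loop my readme describes.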

This was also quite fast, though it required more debugging work. I also needed to do more work in the planning phase to correct some architectural decisions. In all, I probably spent between two and three hours.

Phase 3

I’m working on the LLM integration now. This is decidedly trickier; I’m currently failing to load a local model. But if the first two phases are any indication, I’m guessing it should take a day at most to iron things out, assuming that there are no fundamental flaws in my plan.

Cost

All of this has, so far, cost me less than $20. I’m predicting that an entire prototype of the app will come in well under $100. This is multiple orders of magnitude smaller than it would have cost me before LLM-assisted coding.

Overall thoughts

I felt like I was working with a somewhat scatterbrained, overconfident, but knowledgeable front-end developer with somewhere between junior and mid-level experience. If I had more knowledge and could provide more detailed guidance, I could probably improve my experience.

It’s hard to overestimate the impact that coding agents will have on software development. My hope is that this democratizes bespoke software development in much the same way that apps like GarageBand gave a music studio to everyone with access to a computer or smartphone. Just like making music, the more you know about the process and the underlying art, the more you can create. But even those who dabble can find joy in the making. And not too far beyond that is an uncountable number of little tools and works of art—each one unique to the person who created it, and perhaps only for that person.

I’ll have plenty more to write as I continue my project. What an exciting time to be a programmer!